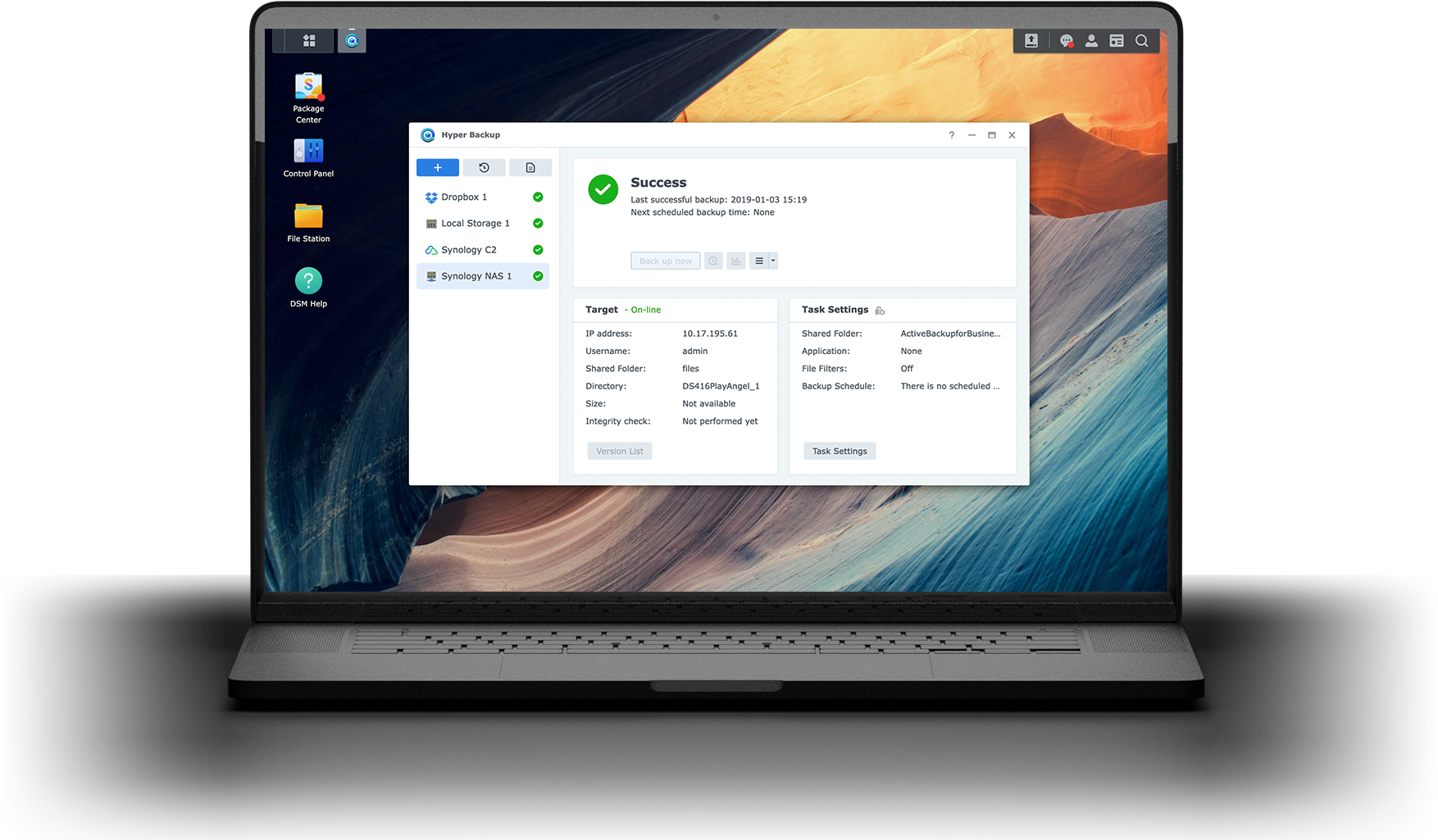

To put things in perspective, we should look at how the value of deduplication is measured for each of the two methods: the effectiveness of data reduction, the maximum achievable deduplication ratio, and the overall impact on storage infrastructure cost and on other data centre resources and functions, such as network bandwidth, data replication, and disaster recovery requirements. The two mainstream methods, per-job deduplication and global deduplication, have long been debated over which serves businesses better. When global deduplication is applied to such a backup strategy, the global deduplication ratio reported by solutions like Synology's Active Backup for Business is often calculated from the used disk size on the source side, which gives a more accurate projection of deduplication efficiency.
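As a rough illustration of why the source-side figure matters, the sketch below defines the deduplication ratio as the amount of source data protected divided by the amount actually stored. The `dedup_ratio` helper and all the sizes are hypothetical and are not taken from Synology's documentation; the point is only that the same stored backup yields a much larger ratio when measured against total disk size instead of used disk size.

```python
def dedup_ratio(source_gb: float, stored_gb: float) -> float:
    """Deduplication ratio = source data protected / data actually stored (hypothetical helper)."""
    if stored_gb <= 0:
        raise ValueError("stored_gb must be positive")
    return source_gb / stored_gb

# Hypothetical figures (GB): a 500 GB source disk of which 100 GB is used,
# protected by a backup that occupies 40 GB on the backup target.
TOTAL_DISK_GB = 500
USED_DISK_GB = 100
STORED_GB = 40

ratio_from_used = dedup_ratio(USED_DISK_GB, STORED_GB)
ratio_from_total = dedup_ratio(TOTAL_DISK_GB, STORED_GB)

print(f"ratio from used size:  {ratio_from_used:.1f}:1")   # 2.5:1
print(f"ratio from total size: {ratio_from_total:.1f}:1")  # 12.5:1
```

Nothing about the stored data changes between the two lines; only the numerator does, which is why a ratio should always be read together with the figure it was computed from.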


Using the same figure as above, where a single full backup occupies 100GB, if the full backup is combined with incremental backups, the additional storage needed beyond the original full backup only has to hold the files that have been altered.
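A minimal sketch of that arithmetic, assuming each incremental run stores only the altered data. Only the 100GB full backup comes from the text; the per-run change sizes and the `full_plus_incremental_gb` helper are hypothetical.

```python
def full_plus_incremental_gb(full_gb: float, changed_gb_per_run: list[float]) -> float:
    """Storage for one full backup plus incrementals that hold only altered files."""
    return full_gb + sum(changed_gb_per_run)

FULL_BACKUP_GB = 100        # figure used in the text
changed_gb = [3, 5, 2, 4]   # hypothetical altered data captured by each incremental run (GB)

total = full_plus_incremental_gb(FULL_BACKUP_GB, changed_gb)
runs = len(changed_gb) + 1

print(f"1 full + {len(changed_gb)} incrementals: {total} GB")      # 114 GB
print(f"{runs} full backups: {FULL_BACKUP_GB * runs} GB")           # 500 GB
```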

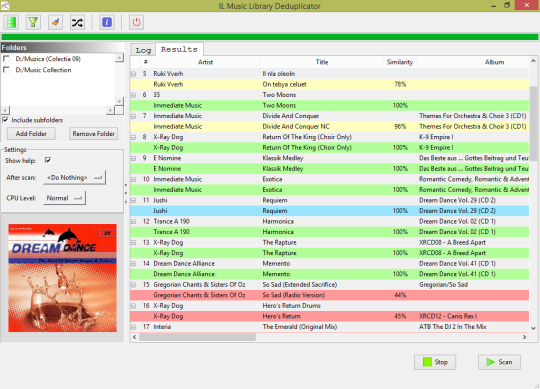

On the other hand, with the global deduplication method, deduplication is performed across backup tasks in all physical and virtual environments, making it the method that is more accurate and serves businesses better.
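To make the difference in scope concrete, here is a minimal sketch of chunk-level deduplication, assuming fixed-size chunks identified by SHA-256 hashes. This is a generic illustration of the technique, not a description of how Active Backup for Business works internally; the chunk size, the `stored_bytes` helper and the toy device images are all hypothetical. The only difference between the two methods in the sketch is whether the chunk index starts empty for every backup task or is shared across all of them.

```python
import hashlib

CHUNK_SIZE = 4  # bytes; deliberately tiny so the toy data splits into readable chunks

def split_chunks(data: bytes, size: int = CHUNK_SIZE) -> list[bytes]:
    """Fixed-size chunking (real systems typically use much larger, often variable-size chunks)."""
    return [data[i:i + size] for i in range(0, len(data), size)]

def stored_bytes(backups: list[bytes], scope: str) -> int:
    """Bytes written to the backup target when each unique chunk is kept once per index.

    scope="per_task": every backup task starts with its own, empty chunk index.
    scope="global":   one chunk index is shared by all tasks and devices.
    """
    if scope not in ("per_task", "global"):
        raise ValueError(f"unknown scope: {scope}")
    shared_index: set[str] = set()
    total = 0
    for data in backups:
        index = shared_index if scope == "global" else set()
        for chunk in split_chunks(data):
            digest = hashlib.sha256(chunk).hexdigest()
            if digest not in index:  # only never-seen chunks consume storage
                index.add(digest)
                total += len(chunk)
    return total

# Hypothetical images of two PCs and one VM that share the same system files.
SYSTEM = b"SYS1SYS2SYS3"
backups = [SYSTEM + b"AAAAAAAA", SYSTEM + b"BBBB", SYSTEM + b"CCCC"]

raw = sum(len(b) for b in backups)
per_task = stored_bytes(backups, "per_task")
global_ = stored_bytes(backups, "global")
print(f"raw={raw} per_task={per_task} global={global_}")  # raw=52 per_task=48 global=24
```

Per-task deduplication only removes the repeated `AAAA` chunk inside the first task, while the shared index also stores the common system chunks once across all three devices.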

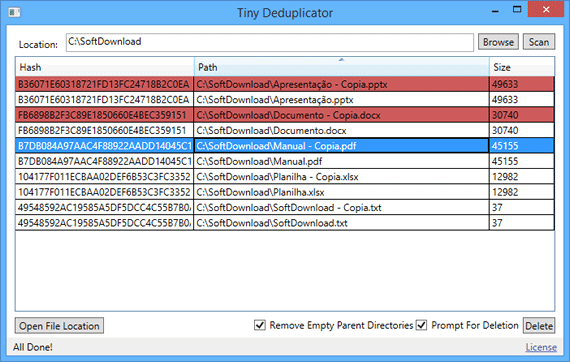

Synology's Active Backup for Business provides built-in deduplication technology to greatly enhance data storage efficiency. However, the effectiveness of deduplication, in terms of rates and ratios, and its cost efficiency are sometimes difficult to compare and measure without a universal standard. Often, when vendors claim to offer data deduplication, it turns out that their deduplication does not remove duplicate data between backup tasks, let alone between devices. Several assumptions therefore need to be made to put things into perspective when comparing global deduplication, one of the most commonly used deduplication methods, with the alternatives. With per-task deduplication, deduplication is performed only within each backup task. Thus, if a single full backup occupies 100GB of storage space and the backup is performed 10 times, a total of 1TB of storage space will eventually be taken. Moreover, there have been cases of the per-task deduplication ratio being calculated from the total disk size on the source side, giving the illusion of a higher deduplication rate.
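The 1TB figure follows from treating every run as a full backup with nothing shared between runs. The sketch below tracks cumulative storage per run under that assumption, and contrasts it with a hypothetical global case in which each later run adds only 5GB of data not already in the shared store; the 5GB figure and both helper functions are assumptions for illustration.

```python
FULL_BACKUP_GB = 100  # one full backup after per-task deduplication (from the text)
RUNS = 10             # number of backup runs (from the text)

def per_task_cumulative_gb(full_gb: float, runs: int) -> list[float]:
    """Per-task deduplication: nothing is shared between runs, so each run adds a full copy."""
    return [full_gb * n for n in range(1, runs + 1)]

def global_cumulative_gb(full_gb: float, runs: int, new_unique_gb_per_run: float) -> list[float]:
    """Hypothetical global case: later runs add only data not already in the shared store."""
    return [full_gb + new_unique_gb_per_run * (n - 1) for n in range(1, runs + 1)]

per_task = per_task_cumulative_gb(FULL_BACKUP_GB, RUNS)
global_ = global_cumulative_gb(FULL_BACKUP_GB, RUNS, new_unique_gb_per_run=5)  # 5 GB/run is assumed

print(per_task[-1], global_[-1])  # 1000 145
```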


The staggering amount of data generated by businesses doesn't come cheap. According to Gartner, by 2024, enterprises will triple the unstructured data they store as file or object storage compared with 2019, and 40% of I&O leaders will implement at least one hybrid cloud storage architecture. The challenge facing IT executives and leaders who want to reduce their businesses' unstructured data has been extensively discussed.

Beyond unstructured data, IT personnel also need to take into account that frequently performed backup tasks, which copy and store large data sets, often produce duplicated data, and in the long run this quickly leads to exponential growth in storage needs.

In other words, bad data is bad for business. Gartner's 2017 Data Quality Market Survey revealed that duplicated and poor-quality data is costing organisations up to $15 million on average, and at the macro level, bad data has been estimated to cost the US more than $3 trillion per year.

A critical driver of a company's business and data continuity is therefore not only for IT managers to understand the differences between the various deduplication methods, but also to implement an agile yet cost-efficient data management platform that provides data deduplication capabilities.
